If you’re managing a website, you’ve probably heard the term robots.txt, but you might not fully understand its essential role in your site’s visibility. This simple text file acts as a gatekeeper between your website and search engine crawlers, telling them which parts of your site they may crawl. While the file seems simple, improper configuration can lead to serious SEO issues, potentially hiding important content from search engines or drawing crawlers into areas of your website you would rather keep out of search results. This article explores how you can use this powerful tool to optimize your site’s crawlability and search performance.


What Is a Robots.txt File?

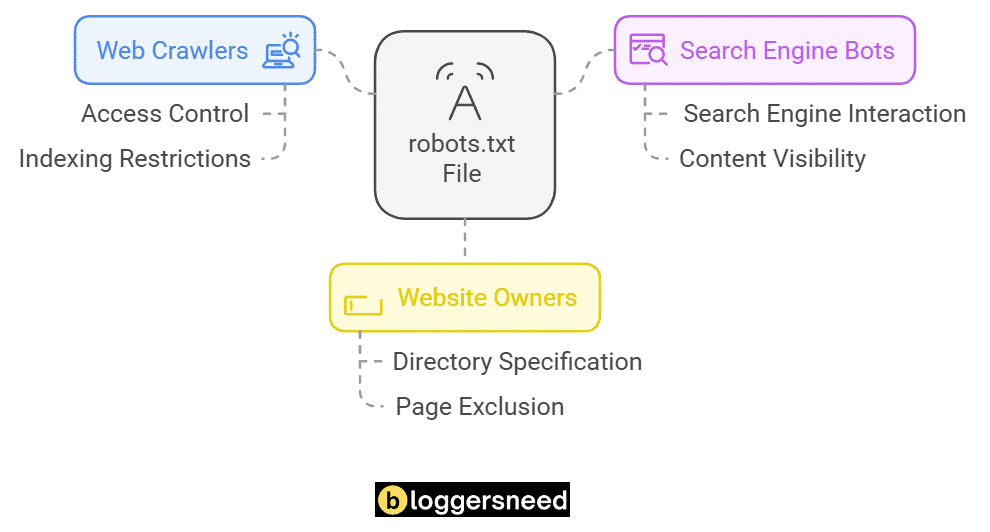

A robots.txt file, located in your website’s root directory, provides essential instructions for web crawlers and search engines, guiding them on how to efficiently interact with your site’s pages during crawling activities.

As part of the standard protocol known as the Robots Exclusion Protocol (REP), your robots.txt file tells crawlers which areas of your site they can access and which ones they can’t. This has a direct SEO impact on how search engines index your content.

You’ll find this file at the root level of your domain (e.g., www.yourwebsite.com/robots.txt), where it serves as the first point of contact between your site and visiting web crawlers, helping maintain efficient site indexing.
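Because the file always sits at the root of the host, its address can be derived from any page URL on the same site. The snippet below is a minimal sketch of that idea; the function name `robots_txt_url` is made up for illustration, and the example domain is the placeholder used above.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("https://www.yourwebsite.com/blog/some-post/?ref=home"))
```

Note that each subdomain is a separate host, so blog.yourwebsite.com would need its own robots.txt.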

Why Is Robots.txt Important?

The robots.txt file is essential for optimizing website crawling and indexing, as it helps search engines prioritize valuable content, enhances server performance by limiting access to resource-heavy pages, and provides better control over search result visibility.

Beyond these technical advantages, robots.txt lets you keep low-value areas, such as admin or internal search pages, out of a crawler’s path.

It is not a security tool, however. The file is publicly readable, it does not block human visitors, and it does not guarantee that the listed content stays hidden, so protect truly sensitive pages with authentication instead.

Understanding Robots.txt Syntax

Basic robots.txt syntax follows a structured format that you’ll need to master for effective crawler control.

A robots.txt file is built from four kinds of lines: user-agent specifications, disallow rules, allow exceptions, and sitemap declarations.

When creating a robots.txt file, first specify which search engine bots your rules apply to. Place each User-agent directive on its own line, followed by the rules for that bot, and put every directive on a separate line.

Listed below are the key components of a robots.txt file.

- User-agent: names the crawler that the rules beneath it apply to. An asterisk (*) applies the rules to every crawler.

- Disallow: blocks specific directories, affiliate links, or pages from crawling.

- Allow: permits access to certain pages within blocked sections.

- Sitemap: gives the URL of your sitemap, which helps search engines discover and index your pages more efficiently.
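To see how these directives fit together, the sketch below feeds a small rule set to Python’s standard `urllib.robotparser` module and asks which URLs a crawler may fetch. The rules and the yourdomain.com URLs are illustrative. One assumption to be aware of: this parser applies the first matching rule in file order, whereas Google picks the most specific (longest) match, so the Allow line is placed before the Disallow line here to give the same answer under both approaches. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: block the admin area but keep one file inside it open.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Sitemap: https://yourdomain.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_crawl(url: str, user_agent: str = "*") -> bool:
    """Return True if the parsed rules let user_agent fetch url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://yourdomain.com/wp-admin/admin-ajax.php",
        "https://yourdomain.com/wp-admin/options.php",
        "https://yourdomain.com/blog/",
    ):
        print(url, may_crawl(url))
    print(parser.site_maps())
```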

How to Create a Robots.txt File?

Creating your robots.txt file is a straightforward process that anyone can follow.

Follow the steps below to create a robots.txt file.

On your computer, open Notepad or another basic text editor.

Paste the code below into the editor, or write your own directives, and save the file with the exact name “robots.txt”.

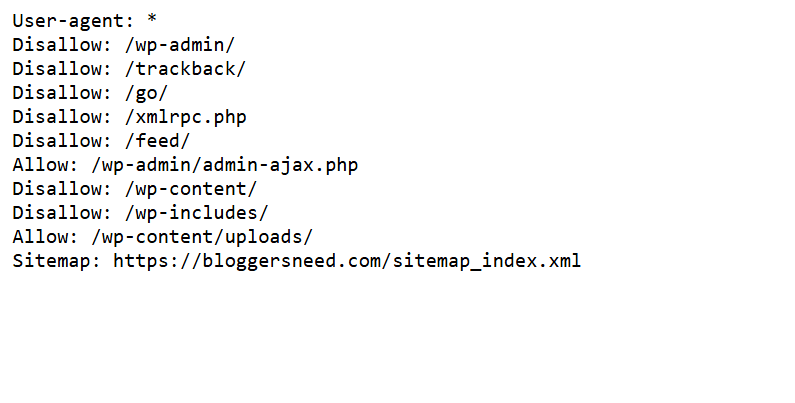

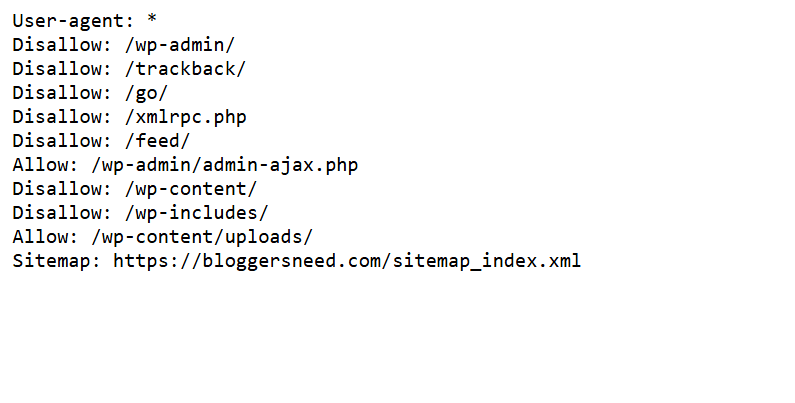

User-agent: *

Disallow: /wp-admin/

Disallow: /trackback/

Disallow: /go/

Disallow: /xmlrpc.php

Disallow: /feed/

Allow: /wp-admin/admin-ajax.php

Disallow: /wp-content/

Disallow: /wp-includes/

Allow: /wp-content/uploads/

Sitemap: https://yourdomain.com/sitemap_index.xml

Once you’ve created the robots.txt file, upload it directly to your website’s root directory. To do this, open your web hosting’s cPanel, click File Manager, navigate to the public folder (often named public_html), and upload the file.
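If you prefer not to type the file by hand, the same directives can be written to disk with a short script. This is only a sketch: the helper name `write_robots_txt` is invented for this example, the rules are copied from the listing above, and you would still upload the resulting file to your site’s root yourself.

```python
from pathlib import Path

# Directives copied from the example above; replace yourdomain.com with your own domain.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /trackback/
Disallow: /go/
Disallow: /xmlrpc.php
Disallow: /feed/
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-content/
Disallow: /wp-includes/
Allow: /wp-content/uploads/
Sitemap: https://yourdomain.com/sitemap_index.xml
"""


def write_robots_txt(directory: str) -> Path:
    """Save the directives as robots.txt inside directory and return the path."""
    path = Path(directory) / "robots.txt"
    # Plain UTF-8 text; the file name must be exactly "robots.txt".
    path.write_text(ROBOTS_TXT, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(write_robots_txt("."))
```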

How to Test and Validate Your Robots.txt File?

Once your robots.txt file is in place, validating its functionality becomes your next priority.

To verify it’s working correctly, check its accessibility by viewing it in your browser’s incognito mode. Simply add “/robots.txt” to your domain name, and you should see your file’s contents displayed, confirming proper implementation.

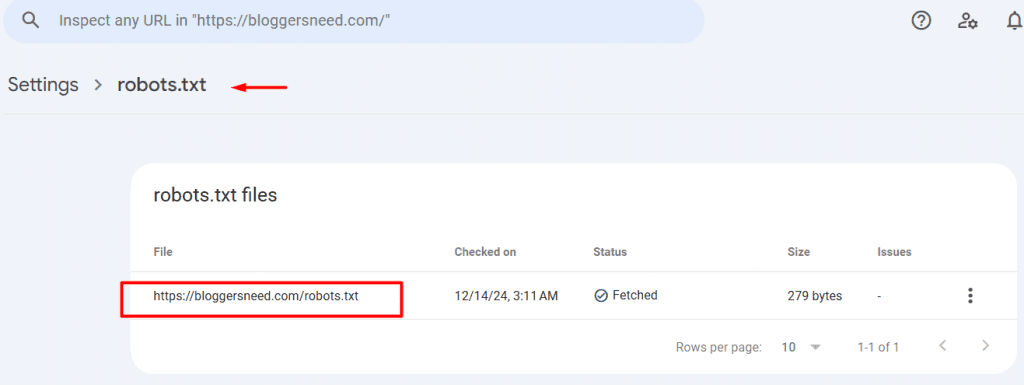

To check your directives, use the robots.txt report in Google Search Console, which shows the robots.txt files Google found for your site and flags problems it had fetching or parsing them. Common syntax errors to look for include missing slashes, incorrect user-agent specifications, and improperly formatted disallow commands.
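Some of these syntax slips can also be caught locally before you upload the file. The sketch below is a hypothetical mini-linter, not an official validator: the function name `lint_robots_txt` and the list of known directives are assumptions made for this example, and it only checks for unknown directive names, rules that appear before any User-agent line, and paths that lack a leading slash.

```python
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list:
    """Return (line_number, message) pairs for likely mistakes in text."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' between directive and value"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
            if not value:
                problems.append((number, "User-agent has no value"))
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


if __name__ == "__main__":
    sample = "User-agent: *\nDisallow: wp-admin/\nDissalow: /feed/\n"
    for number, message in lint_robots_txt(sample):
        print(f"line {number}: {message}")
```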

Follow the steps below when troubleshooting is needed.

- Start by checking if your robots.txt file is accessible at yourdomain.com/robots.txt.

- To resolve crawling issues, check your directives to ensure they are not accidentally blocking essential content.

- Use the URL Inspection tool in Google Search Console to verify that your robots.txt file allows Google’s smartphone crawler to access your pages.

Regularly testing the robots.txt file is essential for maximizing search engine accessibility and safeguarding sensitive content.
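One way to make this testing repeatable is to keep a short list of pages that must stay crawlable and check them against your rules. The sketch below does that with Python’s standard `urllib.robotparser`; the function name `blocked_urls`, the sample rules, and the URLs are illustrative. Keep in mind that this parser uses first-match ordering and does not understand wildcards, so treat its verdict as a rough check rather than a prediction of how every search engine will behave.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list, user_agent: str = "*") -> list:
    """Return the URLs from urls that robots_txt blocks for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /wp-admin/\nDisallow: /blog/\n"
    important = [
        "https://yourdomain.com/",
        "https://yourdomain.com/blog/my-best-post/",
    ]
    for url in blocked_urls(rules, important):
        print("Accidentally blocked:", url)
```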

What Are Some Tips for Optimizing Your robots.txt File?

Implementing best practices in your robots.txt file significantly enhances your website’s crawl efficiency and search performance, while also preventing common SEO pitfalls.

Listed below are some of the tips for optimizing your robots.txt file.

- Keep your file structure clean and organized, using clear directives and avoiding redundant rules. Monitor crawler behavior regularly to verify your directives are being followed correctly.

- Keep the paths in your rules current, remove outdated disallow rules, and use wildcards judiciously.

- To ensure effective indexing and help search engines understand your website, avoid blocking essential resources such as the CSS and JavaScript files that crawlers need to render your pages.

- Don’t forget to regularly audit your robots.txt file, update it as your site evolves, and test changes before implementing them.
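Testing a change before it goes live can be as simple as comparing what the current and the proposed file allow for a fixed set of URLs. The following is a rough sketch using the standard `urllib.robotparser` module; the function name `crawl_changes` and the sample rules are assumptions for illustration, and the parser’s first-match, no-wildcard behavior differs from some search engines.

```python
from urllib.robotparser import RobotFileParser


def _parse(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawl_changes(old_txt: str, new_txt: str, urls: list, user_agent: str = "*") -> dict:
    """Map each URL whose crawlability changes to a (before, after) pair."""
    old, new = _parse(old_txt), _parse(new_txt)
    changes = {}
    for url in urls:
        before = old.can_fetch(user_agent, url)
        after = new.can_fetch(user_agent, url)
        if before != after:
            changes[url] = (before, after)
    return changes


if __name__ == "__main__":
    current = "User-agent: *\nDisallow: /wp-admin/\n"
    proposed = "User-agent: *\nDisallow: /wp-admin/\nDisallow: /blog/\n"
    urls = ["https://yourdomain.com/blog/", "https://yourdomain.com/about/"]
    for url, (before, after) in crawl_changes(current, proposed, urls).items():
        print(f"{url}: allowed {before} -> {after}")
```

An empty result means the edit does not change access for any URL on your list.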

What Are Some Advanced Techniques for Using robots.txt?

Advanced robots.txt implementation offers more precise control over web crawler interactions, using crawler-specific rule groups and pattern matching to optimize search engine access to your site’s content.

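Start by implementing crawl delays to control how quickly specific user agents can access your pages. Support for the Crawl-delay directive varies: Googlebot ignores it, while some other crawlers, such as Bingbot, honor it.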
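As a small illustration, the sketch below declares a crawl delay for one bot and reads it back with Python’s standard `urllib.robotparser`. The ten-second value and the helper name `crawl_delay_for` are arbitrary choices for this example, and whether a real crawler respects the delay is entirely up to that crawler.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: ask one crawler to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 10

User-agent: *
Disallow: /wp-admin/
"""


def crawl_delay_for(user_agent: str, robots_txt: str = ROBOTS_TXT):
    """Return the Crawl-delay in seconds for user_agent, or None if unset."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


if __name__ == "__main__":
    print(crawl_delay_for("bingbot"))
    print(crawl_delay_for("SomeOtherBot"))
```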

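Pattern matching lets a single rule cover many URLs. Major crawlers such as Googlebot and Bingbot support two wildcards in paths: an asterisk (*) matches any sequence of characters, and a dollar sign ($) marks the end of a URL, so you can target a file type or URL parameter in designated sections of your website. Robots.txt has no variables, conditions, or time-based rules.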
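Pattern-based rules are usually written with two wildcard characters, * for any sequence of characters and $ for the end of the URL. The sketch below shows one way such rules could be evaluated, using the longest-match approach described in the Robots Exclusion Protocol standard (RFC 9309), with ties going to Allow. It is a simplified illustration rather than any search engine’s actual code; the function names and sample rules are made up for this example.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern":
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal text and let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_allowed(rules: list, path: str) -> bool:
    """Apply (directive, pattern) rules to path; the longest matching pattern wins."""
    best = None  # (pattern length, is_allow) of the strongest match so far
    for directive, pattern in rules:
        if pattern and pattern_to_regex(pattern).match(path):
            candidate = (len(pattern), directive.lower() == "allow")
            if best is None or candidate > best:
                best = candidate
    # With no matching rule, crawling is allowed by default.
    return True if best is None else best[1]


EXAMPLE_RULES = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
    ("disallow", "/*.pdf$"),          # any URL that ends in .pdf
    ("disallow", "/*?sessionid="),    # any URL carrying a sessionid parameter
]

if __name__ == "__main__":
    for path in ("/wp-admin/admin-ajax.php", "/files/report.pdf", "/shop/item?sessionid=abc"):
        print(path, is_allowed(EXAMPLE_RULES, path))
```

Because the comparison is by pattern length, the order of rules in the file does not matter in this sketch.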

Consider integrating your sitemap directly within robots.txt for improved crawler efficiency. You can also specify different rules for specific user agents, allowing some bots full access while restricting others.

This granular control helps optimize your site’s crawl budget and resource usage.
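To illustrate per-crawler rules, the sketch below defines three groups (one bot with full access, one blocked entirely, and a default for everyone else) and checks them with Python’s standard `urllib.robotparser`. ExampleBadBot is a made-up crawler name and the paths are placeholders. An empty Disallow line means nothing is disallowed for that group.

```python
from urllib.robotparser import RobotFileParser

# Illustrative groups; each starts with its own User-agent line.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: ExampleBadBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def access(user_agent: str, url: str) -> bool:
    """Return True if user_agent may fetch url under the groups above."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://yourdomain.com/private/report.html"
    for bot in ("Googlebot", "ExampleBadBot", "SomeOtherBot"):
        print(bot, access(bot, page))
```

A crawler follows the group that names it and falls back to the * group only when no group matches, which is why Googlebot is not affected by the /private/ rule here.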

FAQs About Robots.txt

Is Robots.txt The Same As Noindex?

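No, robots.txt prevents crawling of specified pages, while noindex allows crawling but prevents indexing in search results. A crawler has to fetch a page to see its noindex directive, so don’t block a page in robots.txt if you rely on noindex to keep it out of search results.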
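Unlike robots.txt, a noindex signal lives in the page itself, either in a robots meta tag or in an X-Robots-Tag response header. The sketch below checks a page for either form using only Python’s standard library; the class and function names are invented for this example, and it looks only at the generic robots meta tag, not at crawler-specific ones.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Record whether a robots meta tag in the page contains noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        tokens = [t.strip().lower() for t in (attributes.get("content") or "").split(",")]
        if "noindex" in tokens:
            self.noindex = True


def has_noindex(html: str, headers: dict = None) -> bool:
    """Return True if the HTML or the response headers carry a noindex directive."""
    normalized = {key.lower(): value for key, value in (headers or {}).items()}
    header_tokens = [t.strip().lower() for t in normalized.get("x-robots-tag", "").split(",")]
    if "noindex" in header_tokens:
        return True
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex(page))
```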

Can Search Engines Ignore Robots.txt Files?

Yes. Following robots.txt is voluntary: reputable search engines respect it, but some crawlers, including malicious bots and certain web archiving projects, may ignore its directives.

Can Robots.txt Prevent Duplicate Content Issues?

No, robots.txt cannot prevent duplicate content; it only restricts crawling, not indexing or duplication itself.

Can Robots.txt Be Used To Block Specific Crawlers?

Yes, robots.txt can specify which crawlers are allowed or disallowed from accessing certain parts of a website.

Can Robots.txt Prevent Or Control Link Indexing?

No, robots.txt cannot control link indexing; it only instructs crawlers on which pages to access.

Why Should You Insert a Sitemap URL in Robots.txt?

Inserting a sitemap URL in robots.txt helps search engines quickly locate your sitemap, improving the efficiency of crawling and indexing your website’s important pages.

Affiliate Disclosure: Some of the links in this post are affiliate links, which means I may earn a small commission if you make a purchase through those links. This comes at no extra cost to you. Thank you for your support!