Google indexing is the foundation of online visibility. Without proper indexing, your content cannot rank or be discovered by users, disrupting your ability to build topical authority and drive organic traffic. Common issues like server errors, incorrect meta tags, blocked resources, and duplicate content can significantly hinder crawling and indexing efficiency.

To fix these issues, start by diagnosing them through Google Search Console, ensuring your robots.txt file allows proper crawling, and verifying the accuracy of your XML sitemap. Address broken links, implement appropriate redirects, and create high-quality, unique content tailored to user intent. By implementing these steps, you enhance your website’s technical performance and contextual relevance, ensuring faster indexing and better rankings.


How Does Google Indexing Work?

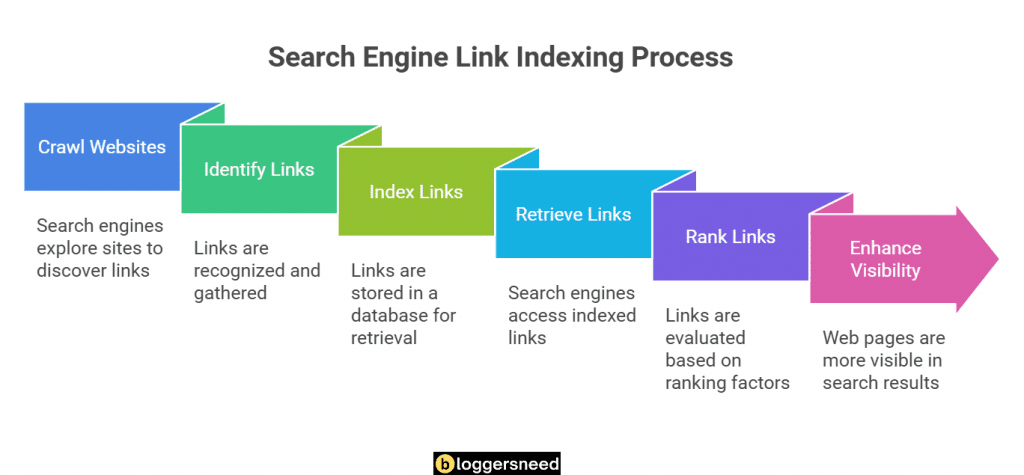

Google indexing is a multi-step process in which Google’s web crawler, Googlebot, systematically discovers, scans, and catalogs web pages across the internet. The crawler follows links to find new pages and analyzes their content, and Google stores the resulting information in its vast index.

During indexing, Google’s algorithms evaluate key factors such as page titles, meta descriptions, headings, and content quality, along with user experience signals and the technical aspects of the website, to understand each page and judge its importance.

Google keeps its index current by recrawling pages at varying frequencies, depending on factors such as site authority, how often the content changes, and content relevance.

What Are Common Causes of Google Indexing Issues?

Common Google indexing issues typically stem from technical problems, including server errors, broken redirects, and poor site architecture, that prevent search engines from properly crawling and indexing web content.

Content-related challenges such as duplicate content, thin content, and low-quality pages can greatly impact Google’s willingness to index specific URLs or entire websites.

Website owners frequently encounter crawlability obstacles due to robots.txt restrictions, incorrect meta robots tags, problematic XML sitemaps, and complex redirect chains that can hinder efficient indexing.

Technical Errors

Two technical errors are the primary obstacles that can prevent Google from properly indexing website content.

The first major issue involves blocked resources, which occur when robots.txt rules or server settings prevent Googlebot from fetching the CSS, JavaScript, or image files it needs to render a page. If Google cannot render the page the way users see it, it may misread the layout or miss content, which weakens how the page is indexed.
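One quick way to check for blocked resources is to test the files a page depends on against your robots.txt rules. The sketch below uses Python’s standard `urllib.robotparser` module; the robots.txt content and resource URLs are hypothetical examples. This parser applies rules by simple prefix matching in file order and does not support Google’s `*` and `$` wildcards or its most-specific-rule precedence, so treat it as a rough first pass and confirm the results in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. In practice, fetch your site's
# /robots.txt and pass its text to blocked_resources().
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
Disallow: /admin/
"""

# Hypothetical CSS, JavaScript, and image files a page needs to render.
RESOURCES = [
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/images/hero.jpg",
]


def blocked_resources(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the robots.txt rules disallow for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # load rules from text instead of fetching them
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_resources(ROBOTS_TXT, RESOURCES):
        print("Blocked for Googlebot:", url)
```

Here only `app.js` is reported, because the `Disallow: /assets/js/` rule matches it; removing that rule (or adding a more specific `Allow`) would let Google render the page fully.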

The second issue is server errors. Responses such as 500 Internal Server Error and timeouts prevent Google’s crawlers from accessing and processing your content, and when these errors persist, Google typically slows its crawling of the site, which delays indexing.

Content Quality Problems

Duplicate content and thin content are two key issues that affect effective indexing, impacting overall content quality and search engine visibility.

These issues considerably impact content freshness and user engagement metrics, leading to poor indexing performance.

Duplicate content occurs when the same or very similar text appears on multiple URLs, leading to confusion for search engines regarding which version to prioritize, ultimately diluting relevance and hindering content diversity.
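To find duplicate or near-duplicate pages on your own site, a common technique is shingling: break each page’s text into overlapping word sequences and compare the sets with Jaccard similarity. The sketch below assumes you have already extracted each page’s main body text; the sample pages, the shingle size of 3, and the 0.8 threshold are hypothetical choices to tune for your site.

```python
import re
from itertools import combinations

# Hypothetical page texts keyed by URL; in practice, extract the main body text of each page.
PAGES = {
    "/shoes?color=red": "Lightweight running shoes with breathable mesh and a cushioned sole.",
    "/shoes": "Lightweight running shoes with breathable mesh and a cushioned sole.",
    "/about": "We are a small team that has been making footwear since 2010.",
}


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Break text into overlapping lowercase word n-grams ("shingles")."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles (0.0 to 1.0)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return URL pairs whose text similarity is at or above the threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for (url_a, set_a), (url_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return pairs


if __name__ == "__main__":
    for url_a, url_b, score in near_duplicates(PAGES):
        print(f"{url_a} and {url_b} are {score:.0%} similar")
```

Comparing every pair is fine for a few thousand pages; each flagged pair is a candidate for consolidation so search engines no longer have to choose between versions.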

Thin content, characterized by pages with minimal substance or value, fails to meet audience targeting objectives and often lacks the depth necessary for proper indexing.

To address these issues, websites must focus on creating unique, thorough content that serves a clear purpose.

This involves maintaining proper content freshness through regular updates, ensuring adequate word count, and providing valuable information that genuinely addresses user needs.

Crawlability Challenges

Crawlability challenges hinder effective link indexing due to misplaced noindex tags that block essential pages and a disorganized site structure that impedes navigation, making it difficult for Google to discover and process content. Additionally, slow server response times can deprioritize crawling of slow-loading pages, while dynamic content requiring JavaScript rendering complicates indexing further. Moreover, with Google’s mobile-first indexing approach, implementing mobile optimization strategies is crucial for ensuring proper site evaluation.
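Misplaced noindex directives can appear in two places: a robots meta tag in the HTML or an `X-Robots-Tag` HTTP response header. The sketch below checks both using only Python’s standard `html.parser`; it assumes you already have the page’s HTML and response headers as a dictionary, and it simplifies by reading a single `X-Robots-Tag` header and treating `none` as equivalent to noindex.

```python
from html.parser import HTMLParser

NOINDEX_VALUES = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"


def split_directives(value: str) -> list[str]:
    """Split a comma-separated directive list, keeping only the text after the last colon
    (so "googlebot: noindex" becomes "noindex")."""
    return [part.rsplit(":", 1)[-1].strip().lower() for part in value.split(",")]


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}  # names arrive lowercased
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            self.directives.extend(split_directives(attr.get("content", "")))


def is_noindexed(html: str, headers: dict[str, str]) -> bool:
    """True if a robots meta tag or the X-Robots-Tag response header says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    found = set(parser.directives) | set(split_directives(header))
    return bool(found & NOINDEX_VALUES)
```

Running this across the URLs in your sitemap is a quick way to catch essential pages that were accidentally marked noindex.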

Analyzing crawl depth, meaning the number of clicks needed to reach a page from the homepage, reveals pages buried too deep for users and crawlers to reach easily. A systematic fix, such as surfacing those pages through better internal linking, helps search engines crawl and index essential content efficiently.
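Crawl depth can be measured with a breadth-first search over your internal link graph, starting from the homepage. The sketch below assumes you already have that graph (for example, exported from a site crawler) and a list of URLs you want indexed; the sample data and the maximum depth of 3 are hypothetical. It also reports URLs that no internal link reaches at all.

```python
from collections import deque

# Hypothetical internal link graph: each URL maps to the URLs it links to.
SITE_LINKS = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/blog/old-post/"],
    "/products/": ["/products/widget/"],
}
# Hypothetical list of every URL that should be indexed (for example, from the XML sitemap).
ALL_URLS = [
    "/", "/blog/", "/products/", "/blog/page/2/", "/blog/page/3/",
    "/blog/old-post/", "/products/widget/", "/landing/promo/",
]


def crawl_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; depth = fewest clicks needed to reach each URL."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each URL first by its shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def depth_report(links, all_urls, max_depth=3, start="/"):
    """Return (URLs deeper than max_depth, URLs not reachable through internal links)."""
    depths = crawl_depths(links, start)
    too_deep = sorted(url for url, depth in depths.items() if depth > max_depth)
    unreachable = sorted(set(all_urls).difference(depths))
    return too_deep, unreachable


if __name__ == "__main__":
    too_deep, unreachable = depth_report(SITE_LINKS, ALL_URLS)
    print("Too deep:", too_deep)
    print("Not linked internally:", unreachable)
```

In this sample, `/blog/old-post/` sits four clicks deep behind pagination, and `/landing/promo/` is not linked internally at all; both would benefit from links from higher-level pages.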

Sitemap Issues

Sitemap issues such as outdated URLs, inaccurate last-modified (lastmod) dates, and improper formatting directly impact crawling efficiency and indexation rates.

To solve sitemap issues, webmasters should maintain accurate timestamps, ensure all listed URLs are accessible, and keep each sitemap file within the protocol’s limits of 50,000 URLs and 50 MB uncompressed. This helps search engines efficiently discover and index website content.

Regular monitoring for errors, maintaining proper XML syntax, and updating sitemaps when content changes are vital steps. Additionally, implementing separate sitemaps for different content types and verifying successful submission through Google Search Console helps maintain ideal indexing performance.
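Several of these checks can be automated before you submit a sitemap. The sketch below uses Python’s standard `xml.etree.ElementTree` to catch XML syntax errors, files over the protocol limits, relative or duplicate `<loc>` values, and malformed `<lastmod>` dates. The date check is a simplified version of the W3C Datetime format (it rejects, for example, year-and-month-only values), and the sample sitemap is hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date, datetime

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000             # per-file URL limit in the sitemaps.org protocol
MAX_BYTES = 50 * 1024 * 1024  # per-file size limit (50 MB, uncompressed)

# Hypothetical sitemap with one bad date and one duplicate URL.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc><lastmod>2024-05-01</lastmod></url>
  <url><loc>https://www.example.com/blog/</loc><lastmod>last week</lastmod></url>
  <url><loc>https://www.example.com/</loc></url>
</urlset>"""


def is_w3c_date(value: str) -> bool:
    """Accept YYYY-MM-DD or a full ISO 8601 timestamp (a simplified W3C Datetime check)."""
    try:
        if len(value) == 10:
            date.fromisoformat(value)
        else:
            datetime.fromisoformat(value.replace("Z", "+00:00"))
        return True
    except ValueError:
        return False


def validate_sitemap(xml_text: str) -> list[str]:
    """Return a list of human-readable problems found in a <urlset> sitemap."""
    if len(xml_text.encode("utf-8")) > MAX_BYTES:
        return ["File exceeds 50 MB uncompressed; split it into several sitemaps"]
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"XML syntax error: {exc}"]
    entries = root.findall("sm:url", NS)
    problems = []
    if len(entries) > MAX_URLS:
        problems.append(f"{len(entries)} URLs exceeds the {MAX_URLS} limit; split the sitemap")
    seen = set()
    for entry in entries:
        loc = (entry.findtext("sm:loc", default="", namespaces=NS) or "").strip()
        if not loc.startswith(("http://", "https://")):
            problems.append(f"Missing or relative <loc>: {loc!r}")
        elif loc in seen:
            problems.append(f"Duplicate <loc>: {loc}")
        seen.add(loc)
        lastmod = entry.findtext("sm:lastmod", namespaces=NS)
        if lastmod is not None and not is_w3c_date(lastmod.strip()):
            problems.append(f"Invalid <lastmod> {lastmod!r} for {loc}")
    return problems


if __name__ == "__main__":
    for problem in validate_sitemap(SAMPLE_SITEMAP):
        print(problem)
```

A check like this fits naturally into a build or deployment step, so broken sitemaps are caught before Google ever reads them.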

Redirect Problems

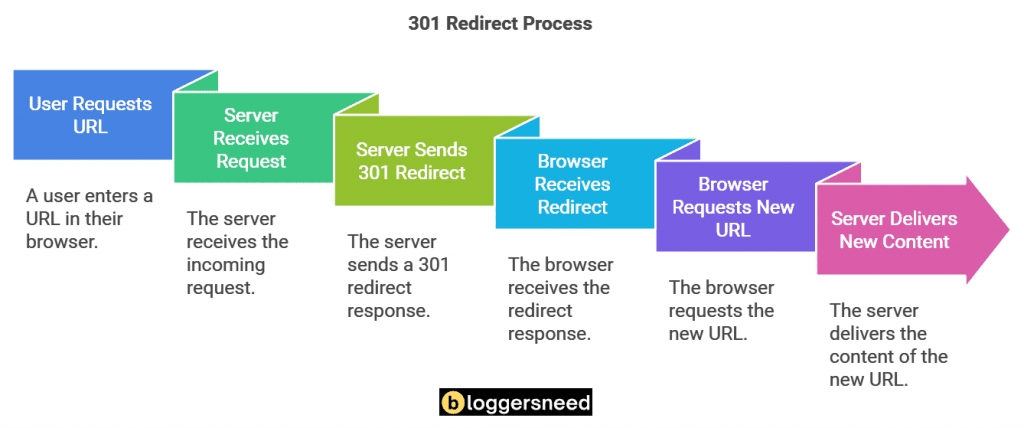

Redirect problems, such as chains and loops, complicate a website’s link structure and delay Google’s ability to crawl and index content efficiently.

Conducting an SEO impact analysis is essential when applying domain redirects, as it helps identify potential indexing issues before they arise. Among the different redirect types, 301 redirects are ideal for permanent URL changes; however, it is important to avoid excessive redirect chains, which can affect crawling efficiency and deplete your site’s crawl budget.
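Chains and loops are easy to spot once you have your redirects as a simple source-to-target map. The sketch below follows each redirect to its final destination, flags any path with more than one hop, and detects loops; the redirect map is hypothetical (in practice you might export it from your server configuration or a site crawl), and the 10-hop cap is an arbitrary safety limit.

```python
# Hypothetical redirect map (source path -> target path), e.g. exported from server config.
REDIRECTS = {
    "/old-page": "/newer-page",
    "/newer-page": "/final-page",
    "/moved": "/final-page",
    "/a": "/b",
    "/b": "/a",
}


def trace_redirect(url: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[list[str], bool]:
    """Follow redirects from url; return (every URL visited, whether a loop was found)."""
    path, seen = [url], {url}
    while path[-1] in redirects and len(path) <= max_hops:
        target = redirects[path[-1]]
        path.append(target)
        if target in seen:
            return path, True  # came back to a URL already visited
        seen.add(target)
    return path, False


def audit_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Flag loops and chains (more than one hop) for every source in the map."""
    report = {}
    for source in redirects:
        path, loop = trace_redirect(source, redirects)
        hops = len(path) - 1
        if loop:
            report[source] = "loop: " + " -> ".join(path)
        elif hops > 1:
            report[source] = f"chain of {hops} hops; redirect directly to {path[-1]}"
    return report


if __name__ == "__main__":
    for source, problem in audit_redirects(REDIRECTS).items():
        print(source, "=>", problem)
```

The fix for each flagged chain is to point the original URL straight at the final destination with a single 301, and to break loops by choosing one canonical target.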

Following redirect best practices, including regular monitoring of redirect patterns, is essential for maintaining optimal SEO performance and ensuring a positive user experience.

How Can You Diagnose Indexing Issues?

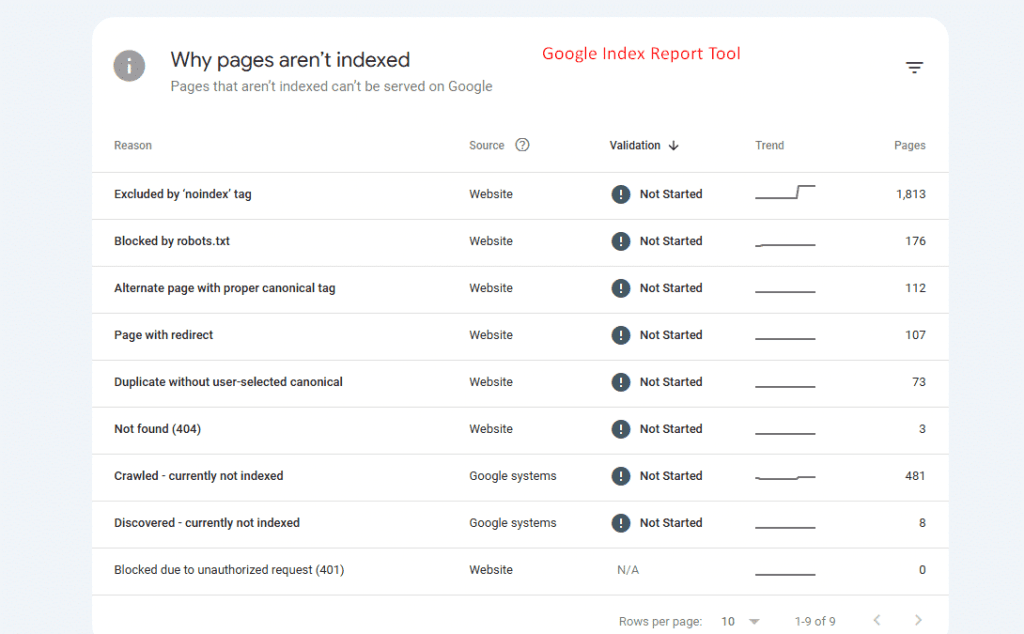

Diagnosing Google indexing issues starts with a thorough examination using Google Search Console, which provides detailed reports about your website’s indexing status and potential problems.

Professional indexing tools like Screaming Frog and Sitebulb can supplement Search Console data by crawling your site to identify technical barriers preventing proper indexation.

These diagnostic methods help webmasters pinpoint specific indexing obstacles, from robots.txt restrictions to crawl budget limitations, allowing for targeted solutions to improve search visibility.

Using Google Search Console

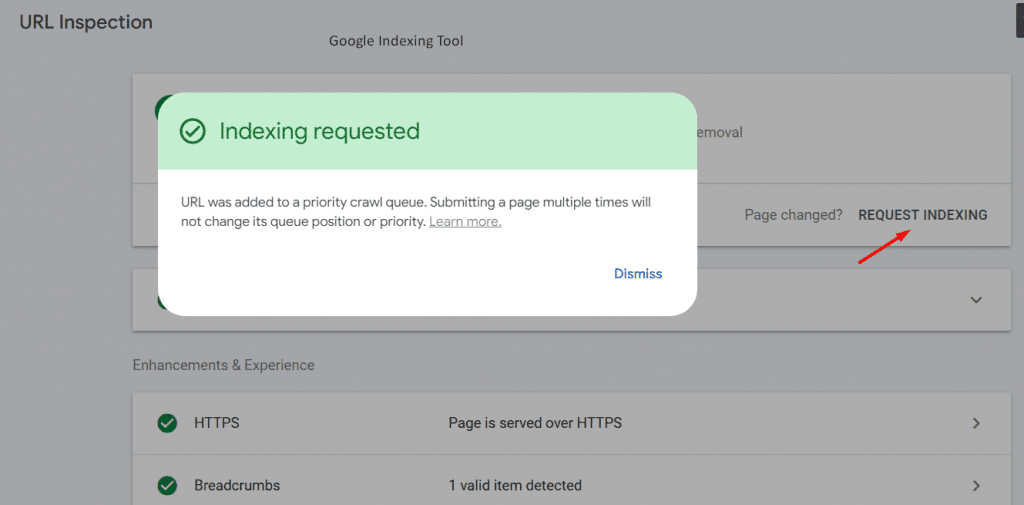

Through Google Search Console, website owners can effectively diagnose indexing issues using two primary tools: the URL Inspection Tool and the Index Coverage Report (now called the Page indexing report).

Used together and in line with indexing best practices, these tools provide thorough insights into how Google crawls and indexes your website.

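The URL Inspection Tool identifies specific technical issues on individual pages: it shows whether a URL is indexed, when Google last crawled it, how Google rendered it, and which structured data Google detected, and it lets you request indexing once a problem is fixed.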

The Index Coverage Report provides a comprehensive overview of your site’s indexing status, listing indexed and non-indexed pages along with the reason each page was left out. Spotting patterns in those reasons helps you manage crawl budget effectively and improve search visibility.

Integrate mobile indexing strategies and monitor both tools regularly to diagnose indexing issues.

Using Indexing Tools

Beyond Google Search Console, several third-party indexing tools offer additional capabilities for diagnosing and monitoring website indexing issues.

Webmasters can enhance their site performance and resolve indexing issues using popular indexing tools like Url Monitor, Indexguru, and Omega Indexer, which offer advanced indexing strategies and detailed performance analysis for tracking URL visibility and analyzing indexing patterns.

These indexing and monitoring tools deliver real-time alerts and detailed reports on indexing status, including crawling patterns, indexation rates, indexed links, and de-indexed links.

What Are Solutions to Common Indexing Issues?

Resolving Google indexing issues requires a systematic approach focused on both technical and content-related solutions.

Website owners can address common indexing problems by fixing technical errors like broken links and improving site architecture, while simultaneously enhancing content quality through proper keyword optimization and regular updates.

The implementation of regularly updated XML sitemaps, effective management of 301 redirects, and ensuring ideal crawlability through improved internal linking structures can greatly boost indexing performance.

Fixing Technical Errors

Technical errors that prevent proper Google indexing can be systematically addressed through the following solutions.

- Conduct a Thorough Technical Audit: Identify critical issues affecting crawlability and indexation.

- Monitor Error Logs Regularly: Detect server response problems and infrastructure bottlenecks.

- Enhance Website Performance: Fix broken links, address redirect chains, and resolve server configuration issues.

- Verify Robots.txt and XML Sitemaps: Ensure proper implementation of directives and maintain ideal crawl budget allocation.

- Monitor and Address Server Response Issues: Keep an eye on server response times and promptly resolve 4xx and 5xx errors.

- Improve User Experience: Optimize page load times, ensure mobile responsiveness, and use clean URL structures.

- Implement Schema Markup Correctly: Help search engines better understand your content’s context and relevance.
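Monitoring error logs for crawler problems can start with a short script. The sketch below assumes your web server writes the common Apache/Nginx "combined" log format and counts the 4xx and 5xx responses served to requests whose user agent mentions Googlebot, grouped by status and path. The log lines are hypothetical, and because user agents can be spoofed, Google recommends confirming real Googlebot traffic with a reverse DNS lookup before acting on the numbers.

```python
import re
from collections import Counter

# Combined log format: IP, identity, user, [time], "request", status, bytes, "referrer", "user agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Hypothetical access-log lines.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /products/widget HTTP/1.1" 500 0 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 162 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:01:00 +0000] "GET /products/widget HTTP/1.1" 500 0 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/May/2024:10:02:00 +0000] "GET /products/widget HTTP/1.1" 500 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    f'66.249.66.1 - - [10/May/2024:10:03:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
]


def googlebot_errors(lines) -> Counter:
    """Count 4xx/5xx responses served to requests whose user agent claims to be Googlebot."""
    errors = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparseable lines and non-Googlebot traffic
        status = int(match.group("status"))
        if status >= 400:
            errors[(status, match.group("path"))] += 1
    return errors


if __name__ == "__main__":
    for (status, path), count in googlebot_errors(SAMPLE_LOG).most_common():
        print(f"{status} {path}: {count} time(s)")
```

Repeated 5xx responses on the same path, like `/products/widget` here, point to a server problem worth fixing before it slows Google’s crawling of the site.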

Enhancing Content Quality

Creating a website with quality content serves as the foundation for successful Google indexing.

To enhance content quality, use clear headings, short paragraphs, and well-structured information. Organize content hierarchically with main points and supporting details, implementing proper HTML markup to help search engines understand your content’s structure.
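A simple way to check the heading hierarchy of a page is to list its `<h1>` to `<h6>` tags in order and flag any jump of more than one level. The sketch below uses Python’s standard `html.parser`; the "no skipped levels" rule is an assumed house convention for a clean outline, not a documented Google requirement.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Record the level (1-6) of each heading tag in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Flag pages with no headings and headings that skip a level (e.g. <h1> then <h3>)."""
    outline = HeadingOutline()
    outline.feed(html)
    if not outline.levels:
        return ["no headings found"]
    issues = []
    for previous, current in zip(outline.levels, outline.levels[1:]):
        if current > previous + 1:
            issues.append(f"<h{previous}> followed by <h{current}> skips a level")
    return issues
```

Moving back up the hierarchy (for example, from `<h3>` to `<h2>`) is allowed; only downward jumps that skip a level are reported.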

Optimize keywords strategically while maintaining natural flow, ensuring terms are contextually relevant and evenly distributed.

Boost user engagement through multimedia integration, incorporating relevant images, videos, and infographics that complement your written content.

Maintain mobile responsiveness across all content elements, as Google prioritizes mobile-first indexing.

Regular content audits and updates guarantee continued relevance and maintain strong indexing signals for Google’s crawlers.

Improving Crawlability

Effective crawlability improvements begin with identifying and addressing common indexing obstacles and using crawl optimization techniques like implementing XML sitemaps, optimizing robots.txt files, and fixing broken internal links.

Enhancing site architecture with logical URL structures and clear navigation improves search engine content understanding, while optimizing page load speeds through image compression, browser caching, and code minimization is crucial for user experience.

Implementing mobile-friendly designs with responsive layouts and optimized viewport configurations is essential for accessibility.

Implementing schema.org vocabularies enhances search engines’ understanding of articles, products, and organizations by providing structured data markup that clarifies content relationships and context.
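Structured data is usually added as a JSON-LD `<script>` tag in the page’s HTML. The sketch below builds a minimal schema.org `Article` object; the function name, its parameters, and the sample values are hypothetical, and the properties shown are only a small subset, so check Google’s Article structured data documentation for the recommended fields and validate the output with the Rich Results Test.

```python
import json


def article_jsonld(headline: str, author: str, published: str, modified: str, url: str) -> str:
    """Build a schema.org Article as a JSON-LD <script> tag for the page's HTML."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,
        "dateModified": modified,
        "mainEntityOfPage": url,
    }
    # Escape "<" so text such as "</script>" inside a value cannot end the tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(article_jsonld(
        headline="How to Fix Google Indexing Issues",
        author="Jane Doe",
        published="2024-05-01",
        modified="2024-05-10",
        url="https://www.example.com/blog/fix-indexing-issues/",
    ))
```

Generating the markup from the same data that renders the page keeps the structured data in sync with the visible content.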

Finally, monitoring crawl budget regularly through Google Search Console helps identify and resolve indexing inefficiencies.

Updating Sitemaps Regularly

Regular sitemap maintenance is a fundamental solution to common indexing challenges.

By creating a sitemap that aligns with your website’s structure and keeping it in step with content updates, you help search engines navigate your site smoothly.

Updating your sitemap frequently through automated updates, XML and RSS feeds, search engine submissions, validation checks, and performance monitoring helps maintain consistent crawling patterns.

This ensures search engines receive accurate, real-time information about your website’s structure and content changes.

Additionally, implementing dynamic sitemap generation for large websites guarantees that newly published content gets discovered and indexed promptly by search engine crawlers.
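Dynamic sitemap generation usually means rebuilding the XML from your content database whenever pages change. The sketch below assumes you can list each page as an absolute URL plus its last-modified date; it writes standard `<urlset>` files with Python’s `xml.etree.ElementTree` (which also escapes characters such as `&`) and starts a new file every 50,000 URLs. The function name and sample pages are hypothetical; with more than one file, you would list them in a sitemap index.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file URL limit in the sitemaps.org protocol


def build_sitemaps(pages, max_urls: int = MAX_URLS_PER_FILE) -> list[str]:
    """pages: iterable of (absolute_url, last_modified_date). Returns one XML string per file."""
    pages = list(pages)
    files = []
    for start in range(0, len(pages), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc, lastmod in pages[start:start + max_urls]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = loc  # special characters are escaped on output
            ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # YYYY-MM-DD
        xml_body = ET.tostring(urlset, encoding="unicode")
        files.append('<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body)
    return files


if __name__ == "__main__":
    pages = [
        ("https://www.example.com/", date(2024, 5, 1)),
        ("https://www.example.com/blog/", date(2024, 5, 3)),
    ]
    print(build_sitemaps(pages)[0])
```

Calling this from a publish hook or a scheduled job keeps lastmod dates accurate without manual edits.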

Managing Redirects Effectively

Proper redirect management plays an essential role in maintaining healthy website indexation and preserving SEO value across URL changes. Implementing 301 redirects correctly guarantees that link equity transfers to new pages while preventing confusion for both users and search engines.

To optimize redirect implementation, avoid creating redirect chains that force browsers through multiple hops before reaching the destination URL. Similarly, eliminate redirect loops that create endless cycles of redirections. While temporary redirects serve specific short-term purposes, they shouldn’t be used for permanent URL changes.

Following redirect best practices, including regularly auditing redirects, maintaining a thorough redirect map, and promptly fixing broken redirects, helps maintain site authority, reduces crawl budget waste, and ensures smooth user navigation, all of which support faster indexing of links.

Using High Performance Hosting

High-performance hosting is essential for improving Google indexing, as it utilizes high-speed servers and ample bandwidth to ensure maximum uptime. This reliability allows search engine crawlers to access and process web pages efficiently, leading to more frequent and effective indexing.

The strategic selection of server location plays a vital role in reducing latency and improving crawl efficiency across different geographical regions.

Professional customer support from hosting providers guarantees quick resolution of technical issues that might impact crawling and indexing. Quality hosting solutions also offer features like server-side caching, content delivery networks, and automated backup systems to maintain stable performance and prevent indexing disruptions.

What Are Advanced Strategies for Ensuring Indexing?

There are three advanced strategies for ensuring your pages get indexed.

The first is JavaScript optimization, which ensures search engines can effectively crawl and index dynamic content through proper implementation of server-side or dynamic rendering.

The second is regular monitoring of Core Web Vitals, crawl stats, and indexing coverage through Google Search Console, which provides essential insights into potential indexing roadblocks and areas for improvement.

The third is handling manual actions: when Google issues one, webmasters must promptly address the underlying issues through careful analysis of the Manual Actions report, implementation of the necessary fixes, and submission of a thorough reconsideration request.

Optimizing JavaScript Content

Successfully optimizing JavaScript content for Google indexing requires implementing sophisticated techniques that go beyond basic SEO practices.

Key JavaScript rendering techniques include server-side rendering (SSR) and dynamic rendering, which guarantee search engines can effectively crawl and index JavaScript-generated content.

Implementing SEO-friendly frameworks like Next.js or Gatsby, combined with strategic lazy loading, helps balance user experience with crawler accessibility.

Structured data implementation becomes vital when working with JavaScript-heavy sites, as it provides clear signals to search engines about content relationships and hierarchy.

Optimizing page speed through code splitting, minification, and efficient resource loading guarantees JavaScript doesn’t impede crawling and indexing processes.

Critical considerations include reducing initial payload size, implementing proper error handling, and utilizing modern JavaScript features while maintaining backwards compatibility for search engine bots.

Monitoring Performance Metrics

Effective search engine optimization depends on real-time monitoring of indexing performance metrics, such as indexing speed and search visibility. By closely tracking these key indicators, webmasters can quickly identify and address crawling issues that might affect rankings.

Establishing performance benchmarks facilitates systematic evaluation of content updates and their influence on user experience. Key metrics to monitor include crawl rate, indexed page count, and crawl errors, with tools like Google Search Console offering essential insights into search engine interactions with your content.

Regular analysis and monitoring of mobile indexing stats, Core Web Vitals, and crawl budget allocation guarantees optimal indexing performance for your site’s content while preserving search engine efficiency.

Handling Manual Actions from Google

When Google issues manual actions against a website, swift and methodical responses become necessary to maintain indexing health and search visibility. Understanding the reasons behind a manual action, from unnatural links to thin content, enables webmasters to implement targeted recovery plans.

To effectively resolve manual action issues, prioritize removing problematic content, disavowing toxic links, and enhancing content quality while strengthening technical compliance, followed by conducting impact analysis, documenting violations, implementing corrective measures, and preparing a detailed reconsideration request.
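If link removal requests fail, the remaining links can be disavowed by uploading a plain-text file to Google’s disavow links tool. That file lists one entry per line: `domain:` lines for whole domains, full URLs for individual pages, and lines starting with `#` as comments. The sketch below assembles such a file from lists you have already reviewed; the function name and the sample domains and URLs are hypothetical, and deciding which links actually deserve disavowing remains a manual judgment.

```python
def build_disavow(domains: list[str], urls: list[str], note: str = "") -> str:
    """Build the text of a disavow file: '#' comments, 'domain:' lines, then individual URLs."""
    lines = [f"# {note}"] if note else []
    # Normalize and de-duplicate domains so each appears once.
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    lines += sorted({u.strip() for u in urls})
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_disavow(
        domains=["Spammy-Links.example", "spammy-links.example"],
        urls=["https://bad.example/page.html"],
        note="Links reviewed 2024-05-01; removal requests unanswered",
    ))
```

Keeping the note and the source lists under version control also gives you the documentation a reconsideration request calls for.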

A reconsideration request should include clear evidence that the issues have been resolved, including before-and-after comparisons and the preventive measures you have implemented.

How Can You Monitor Link Indexing?

Monitoring link indexing effectively requires specialized tools designed to track the status of your URLs in Google’s index.

Google Search Console serves as a primary monitoring solution, offering detailed reports on indexed pages and sending email notifications when it detects new crawling or indexing issues.

Additional third-party indexing tools like ContentKing, Screaming Frog, and Sitebulb can complement Search Console by providing real-time monitoring and automated indexing checks across your website.

Using Indexing Monitoring Tools

Tools for tracking Google’s indexing status have become essential for website owners and SEO professionals who need to maintain visibility in search results.

Following indexing best practices starts with reviewing the available monitoring tools and choosing those that fit your site.

When choosing the right tools, consider solutions like Google Search Console, Screaming Frog, and Sitebulb that offer deep crawl analysis capabilities.

These platforms excel at analyzing indexing data through automated reporting features, providing insights into crawl errors, indexation rates, and coverage issues. Advanced monitoring tools can track URL status changes, detect crawl anomalies, and generate detailed reports on indexing performance.

Regular monitoring helps identify potential problems before they impact search visibility, allowing for swift remediation of indexing issues through data-driven decisions and systematic enhancement efforts.

Setting Up Alerts in Google Search Console

Setting up alerts in Google Search Console involves four essential steps to maintain consistent monitoring of link indexing status.

- First, add and verify your website in Google Search Console.

- Second, open your Search Console email preferences and enable notifications for the categories that matter to your site, such as indexing issues and manual actions.

- Third, define indexing thresholds, such as a minimum number of indexed pages or a maximum number of crawl errors, that should prompt action. Search Console’s own emails are triggered by issues Google detects rather than by custom thresholds, so threshold-based alerts usually require a third-party monitoring tool or your own tracking of the report data.

- Finally, make sure these alerts reach the people responsible for the site, so that significant changes in indexing patterns, coverage issues, or manual actions are acted on promptly.

How often you review these reports, whether daily or weekly, depends on your site’s size and monitoring needs.

When setting thresholds, consider factors like typical indexing fluctuations and site update frequency to avoid alert fatigue while ensuring critical issues are promptly detected and addressed through the notification system.
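One way to respect typical fluctuations while still catching real problems is to require a drop to be both unusual and material before alerting. The sketch below applies that idea to daily indexed-page counts you record yourself (for example, from periodic exports of the Page indexing report); the sample history, the 3-standard-deviation rule, and the 10% minimum drop are hypothetical settings to tune for your site.

```python
from statistics import mean, stdev

# Hypothetical daily counts of indexed pages recorded over the past week.
HISTORY = [10200, 10150, 10230, 10180, 10210, 10190, 10170]


def indexing_alert(history: list[int], current: int,
                   sigmas: float = 3.0, min_drop_pct: float = 10.0) -> bool:
    """Alert only when today's drop is both unusual (beyond normal variation)
    and material (at least min_drop_pct of the baseline)."""
    baseline = mean(history)
    spread = stdev(history) if len(history) > 1 else 0.0
    drop = baseline - current
    drop_pct = 100 * drop / baseline if baseline else 0.0
    return drop > sigmas * spread and drop_pct >= min_drop_pct


if __name__ == "__main__":
    for today in (10150, 10050, 8900):
        print(today, "alert" if indexing_alert(HISTORY, today) else "ok")
```

With this history, a dip to 10,150 is normal noise, 10,050 is unusual but too small to matter, and 8,900 triggers an alert; requiring both conditions is what keeps alert fatigue down.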

Conclusion

Google indexing success requires a strategic, multi-faceted approach combining technical optimization, quality content, and consistent monitoring. Implementing proper XML sitemaps, resolving crawl errors, optimizing robots.txt files, and maintaining clean site architecture form the foundation for effective indexing. Regular audits, coupled with proactive measures like internal linking optimization and strategic content updates, guarantee sustained visibility in search results. Monitoring tools and search console data provide essential insights for maintaining peak indexing performance.

Affiliate Disclosure: Some of the links in this post are affiliate links, which means I may earn a small commission if you make a purchase through those links. This comes at no extra cost to you. Thank you for your support!