Ever wondered how search engines decide which links to trust and rank? Link indexing is the essential process where search engines discover, analyze, and catalog hyperlinks across the internet. By evaluating link attributes such as anchor text, destination URLs, and even your site’s technical setup, search engines determine your site’s authority and relevance. Factors like domain age, content quality, and performance can make or break indexing success. Understanding how this process works is your first step to optimizing your site’s visibility in search results.

What Is Link Indexing?

Link indexing is the process by which search engines discover, crawl, and catalog hyperlinks to understand the relationships between web pages.

For site owners, working with link indexing means using link indexing tools to monitor link health, identify broken links, and analyze linking patterns across domains. Done well, it improves visibility, strengthens authority, and helps search engines understand your content hierarchy. However, challenges such as duplicate content and orphaned pages require careful planning. By balancing technical optimization, quality content, and strategic link building, website owners can achieve sustainable organic growth.

Why Is Link Indexing Important?

Link indexing is crucial because search engines can only rank content they have discovered and understood. Proper indexing helps search engines map your content hierarchy and ensures your backlinks are counted toward your site's authority and credibility. Without it, valuable pages may remain invisible in search results, reducing traffic. Ensuring your links are properly indexed maximizes your website's ranking potential and supports sustainable growth.

Faster indexing also shortens the time it takes for new or updated content to appear in search results, which can have a measurable effect on traffic and user engagement.

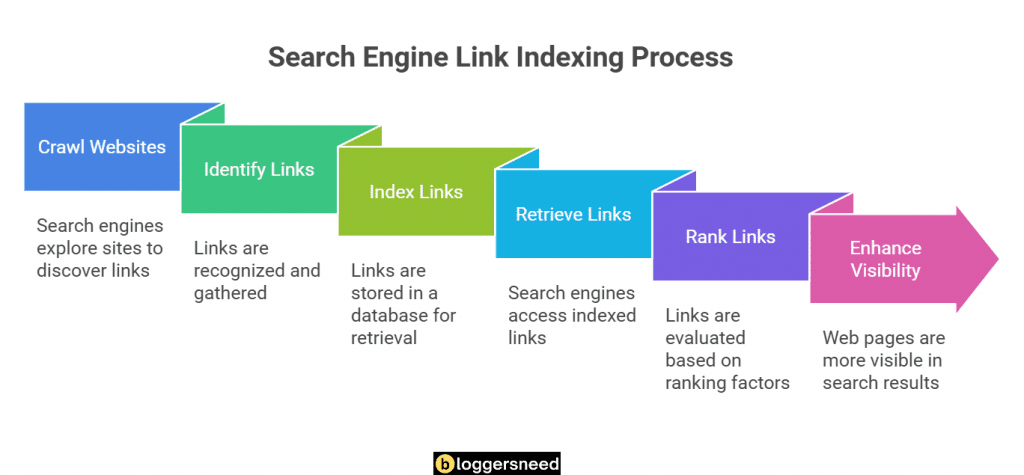

How Does the Link Indexing Process Work?

Link indexing follows a systematic process that begins with search engine crawlers discovering and following links across websites to map the interconnections between web pages.

During analysis, search engines evaluate key link attributes including anchor text, destination URLs, and surrounding context before storing this data in massive indexes that track the relationships between pages.

Once indexed, links become part of the search engine's link graph, allowing the engine to understand website hierarchies, determine page authority, and deliver relevant results for users' queries.

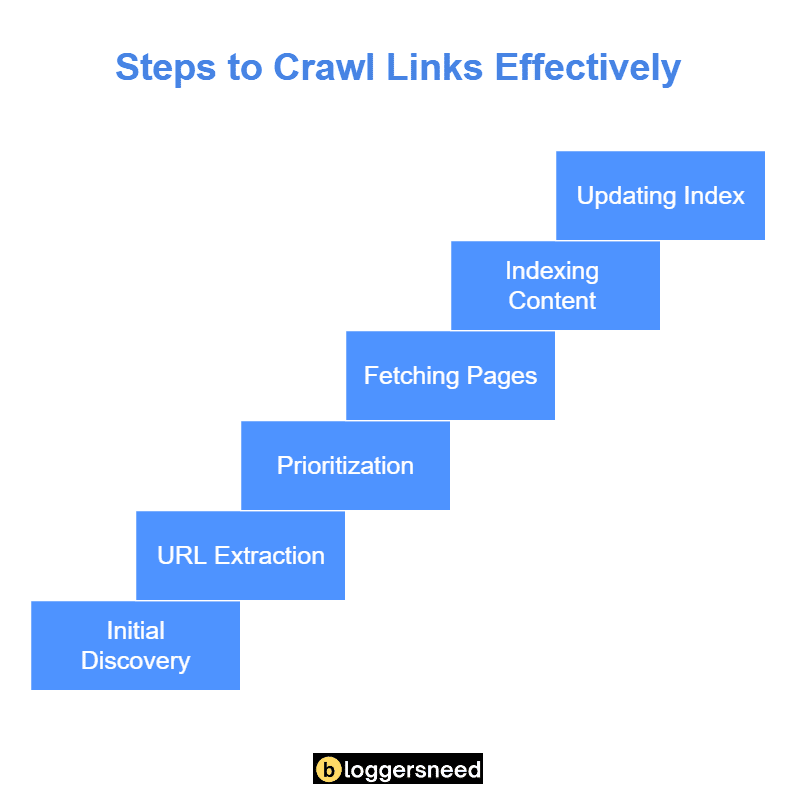

What Are the Steps Involved in Crawling Links?

When web crawlers discover and process links, they follow a systematic process made up of multiple coordinated steps.

- Identify Seed URLs: The crawler starts from a set of seed URLs, which serve as the initial entry points for link discovery.

- Initiate Link Discovery Phase: The crawler scans the HTML code of web pages to detect both internal and external links.

- Determine Crawl Frequency: The crawler assesses factors like page importance and update patterns to establish how often to revisit a page.

- Establish Crawl Depth Parameters: It decides how many links deep to follow from the original URL, which determines how far the crawl extends.

- Prioritize URLs: To optimize crawling efficiency, the system prioritizes URLs using various metrics, including domain authority and link relevance.

- Process robots.txt Files: The crawler reads robots.txt files to respect site directives regarding which pages can or cannot be crawled.

- Manage Crawl Budgets: It works within a crawl budget, the number of pages the search engine is willing to crawl on a site within a given timeframe.

- Handle Technical Aspects: The crawler manages technical elements such as URL normalization and duplicate detection to ensure accurate indexing.

- Extract Critical Link Attributes: During the crawl, it extracts and stores important link attributes like anchor text, target URL, and surrounding context.

- Evaluate Link Quality Signals: The crawler checks for broken links, redirects, or potential spam patterns to assess the quality of links.

- Maintain Resource Efficiency: The crawler balances thorough link discovery against the bandwidth, storage, and processing each fetch consumes.

- Respect Web Server Limitations: The crawler implements controlled crawl rates to avoid overwhelming web servers and ensure smooth operations.
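The crawling steps above can be illustrated with a small breadth-first crawler. This is a minimal sketch, not how any production search engine works: `fetch` is a hypothetical callable that returns a page's HTML (or `None` for a broken link), `max_pages` stands in for the crawl budget, `ExampleBot` is a made-up user agent, and URL prioritization is simplified to plain breadth-first order. It does show seed URLs, a depth limit, robots.txt handling via Python's standard `urllib.robotparser`, URL normalization with duplicate detection, and anchor-text extraction.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


class LinkExtractor(HTMLParser):
    """Collects (href, anchor_text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only collect text inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def normalize(url):
    """URL normalization: drop the #fragment and lowercase scheme and host."""
    url, _fragment = urldefrag(url)
    p = urlsplit(url)
    return urlunsplit((p.scheme.lower(), p.netloc.lower(), p.path or "/", p.query, ""))


def crawl(seeds, fetch, robots_lines, max_depth=2, max_pages=100, agent="ExampleBot"):
    """Breadth-first crawl; returns (source, target, anchor_text) link records."""
    robots = RobotFileParser()
    robots.parse(robots_lines)  # respect robots.txt directives
    queue, seen = deque(), set()  # seen = duplicate detection
    for url in seeds:
        url = normalize(url)
        if url not in seen:
            seen.add(url)
            queue.append((url, 0))
    link_index = []
    crawled = 0
    while queue and crawled < max_pages:  # stop when the crawl budget is spent
        url, depth = queue.popleft()
        if not robots.can_fetch(agent, url):
            continue
        html = fetch(url)
        crawled += 1
        if html is None:  # broken link: nothing to extract
            continue
        extractor = LinkExtractor()
        extractor.feed(html)
        for href, anchor in extractor.links:
            if not href:
                continue
            target = normalize(urljoin(url, href))
            link_index.append((url, target, anchor))
            if depth < max_depth and target not in seen:  # crawl depth limit
                seen.add(target)
                queue.append((target, depth + 1))
    return link_index
```

Because `fetch` is injected, the crawler can be exercised offline by passing a dictionary's `get` method, for example `crawl(["https://example.com/"], pages.get, ["User-agent: *", "Disallow: /private"], max_depth=1)`. A real crawler would also add politeness delays between requests to the same host, which this sketch leaves out.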

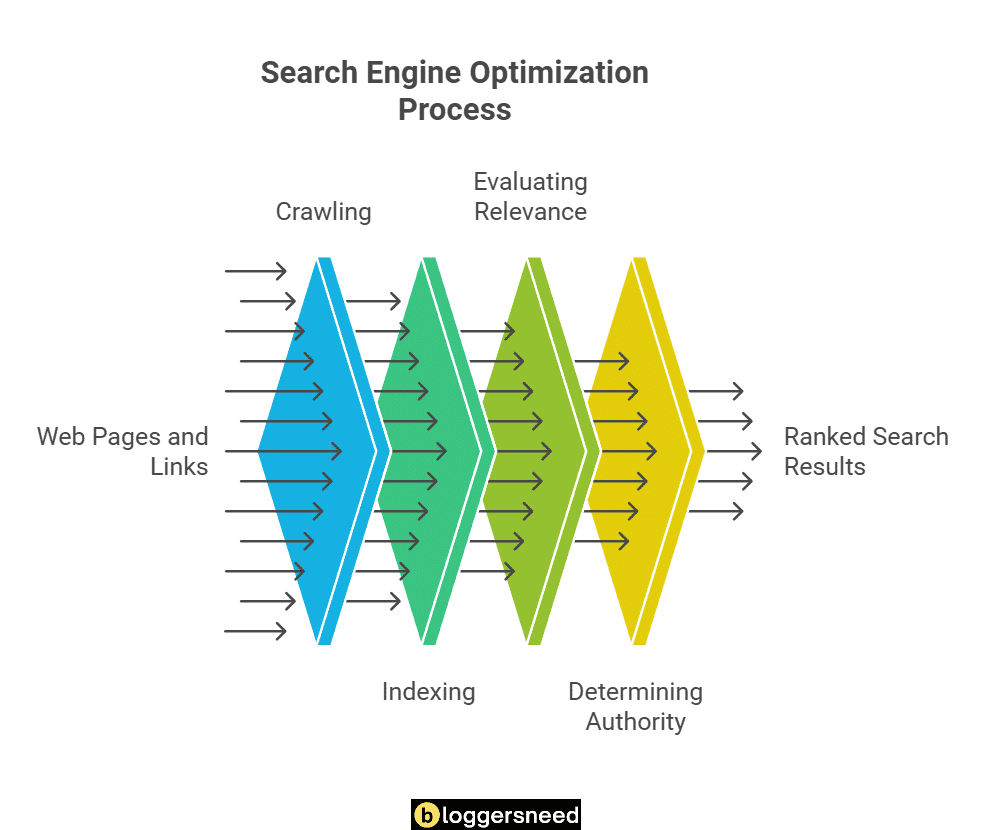

How Do Search Engines Analyze and Store Links?

Search engines evaluate link value and influence by analyzing anchor text, surrounding content, and the relevance of the linking page’s topic. They implement efficient indexing strategies to categorize and store link data, allowing for a better understanding of authority flow between pages. This process helps determine link importance and its impact on search rankings.

Additionally, optimizing the crawl budget ensures that search engines focus on the most valuable links. The storage infrastructure utilizes distributed databases to maintain up-to-date information on link relationships and authority metrics, ensuring fresh and accurate link indexes that enhance ranking algorithms.
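One well-known way to model authority flowing between pages is the original PageRank algorithm. The sketch below is the textbook power-iteration version over a list of (source, target) links; it illustrates the idea and is not a description of how Google or any other engine ranks pages today. The 0.85 damping factor is the conventional value from the original paper, used here as an assumption.

```python
def pagerank(edges, damping=0.85, iterations=100):
    """Power-iteration PageRank over a list of (source, target) links."""
    nodes = sorted({node for edge in edges for node in edge})
    outlinks = {node: [] for node in nodes}
    for source, target in edges:
        outlinks[source].append(target)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every page keeps a small baseline share (the "random jump").
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = outlinks[node]
            if targets:
                # A page splits its authority evenly across its outlinks.
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                for target in nodes:
                    new_rank[target] += damping * rank[node] / n
        rank = new_rank
    return rank
```

For a graph where pages `a`, `b`, and `c` link in a cycle and `d` links only to `c`, `c` ends up with the highest score because it receives authority from two pages, while `d`, which has no inbound links, keeps only the baseline share.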

What Happens After Links Are Indexed?

Once indexing is complete, search engines incorporate link data into their ranking algorithms. They continuously re-evaluate indexed links for their value and influence on rankings, considering factors like link visibility, authority, and relevance. How quickly new links are indexed depends on site authority, content freshness, and crawl frequency.

Regular backlink evaluation ensures indexed links meet quality standards and maintain relevance, filtering out spam while supporting valuable content connections. In this post-indexing phase, search engines map page relationships, analyzing metrics such as click-through rates, user engagement, and link decay to refine ranking signals.

This evaluation data helps search engines understand content hierarchy, topic relevance, and domain authority, keeping search results current, relevant, and valuable while preserving the integrity of the link graph.

What Factors Influence Link Indexing?

Several key factors play an essential role in determining how search engines index backlinks to websites.

The quality and authority of the linking site greatly impact indexing speed and persistence, while the relevance between the linked content and the destination page serves as a critical ranking signal.

Technical aspects such as proper HTML implementation, server response codes, and crawlability of the linking page directly affect whether search engines successfully discover and process the links.

Quality of the Linking Site

The quality of the linking site determines whether search engines will index backlinks. Search engines evaluate a website’s link authority and overall site reputation before deciding to crawl and index links from that domain. Sites with established domain trustworthiness, consistent publishing history, and natural linking patterns are more likely to have their outbound links indexed quickly.

Sites with a history of spam, manipulative linking practices, or thin content typically struggle to get their outbound links indexed. To earn Google's trust, publish well-researched, high-quality content: links placed within such content generally receive preferential treatment over links from low-value pages or boilerplate locations like footers and widgets.

Content Relevance

Content relevance plays a crucial role in whether search engines index a backlink. Through sophisticated semantic analysis, search engines evaluate how well the linking page's content aligns with the destination page, favoring connections that are meaningful for users. This assessment considers factors like keyword relevance and the link's contextual role within the linking page. Search engines favor links between pages whose themes naturally align, such as a food blog linking to a recipe website rather than to an automotive site. User engagement metrics, such as bounce rate, time on page, and other behavior patterns, may also influence how search engines perceive the relationship between pages.

To optimize for content relevance, verify that linking pages share topical alignment, maintain consistent themes throughout the content, and provide genuine value to users seeking information within that subject area.
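Real semantic analysis relies on far richer language models, but the idea of topical alignment can be illustrated with a crude stand-in: cosine similarity between the word-count vectors of two pages. The tokenizer and the tiny stop-word list below are assumptions chosen for illustration, not anything a search engine is known to use.

```python
import math
import re
from collections import Counter

# Assumption: a deliberately tiny stop-word list for the example.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "for", "in", "on", "with", "is"}


def term_vector(text):
    """Lowercase word counts, ignoring a few common stop words."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w not in STOP_WORDS)


def cosine_similarity(text_a, text_b):
    """Score in [0, 1]; higher means the two texts share more vocabulary."""
    va, vb = term_vector(text_a), term_vector(text_b)
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    return dot / norm if norm else 0.0
```

Comparing a food blog's text with a recipe site's text yields a clearly higher score than comparing it with an automotive site's text, mirroring the example above, although word overlap misses synonyms and context that real semantic models capture.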

Technical Issues

Various technical factors can greatly impact whether search engines successfully index backlinks, ranging from server configuration issues to improper HTML implementation.

Common link errors, such as broken or dead links, can prevent crawlers from discovering and indexing important backlinks. Additionally, inefficient crawl budget allocation may result in search engines failing to process all links on a website, particularly on large sites with complex architectures.

Redirection issues pose another significant challenge: improperly implemented 301 or 302 redirects, such as a temporary 302 used for a permanent move or several redirects chained in a row, can lead to link equity loss and indexing delays. When multiple URLs point to the same content, incorrect canonical tags can confuse search engines about which version to index, potentially causing valuable backlinks to go unnoticed.
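A redirect audit of this kind can be sketched without touching the network. Here `responses` is a hypothetical lookup table standing in for real HTTP requests, mapping each URL to its status code and `Location` header. The function follows the chain and flags temporary redirects (302, 303, 307), chains longer than one hop, loops, and chains that end in an error instead of a 200 page.

```python
from urllib.parse import urljoin

REDIRECT_CODES = {301, 302, 303, 307, 308}
TEMPORARY_CODES = {302, 303, 307}


def audit_redirects(url, responses, max_hops=10):
    """Follow a redirect chain and list problems that can cost link equity.

    responses maps url -> (status_code, location); a hypothetical stand-in for
    real HTTP requests. Unknown URLs are treated as 404 Not Found.
    """
    chain, problems = [url], []
    for _ in range(max_hops):
        status, location = responses.get(chain[-1], (404, None))
        if status not in REDIRECT_CODES:
            if status != 200:
                problems.append(f"ends in HTTP {status}")
            elif len(chain) > 2:
                problems.append(f"{len(chain) - 1}-hop chain; link straight to {chain[-1]}")
            return chain, problems
        if status in TEMPORARY_CODES:
            problems.append(f"temporary {status} at {chain[-1]}")
        target = urljoin(chain[-1], location or "")  # Location may be relative
        chain.append(target)
        if target in chain[:-1]:
            problems.append("redirect loop")
            return chain, problems
    problems.append(f"gave up after {max_hops} hops")
    return chain, problems
```

A single permanent hop to a 200 page is reported as clean; anything else gets a short explanation that points at the URL to fix or to link to directly.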

Server response times, robots.txt configurations, and XML sitemap accuracy also influence link indexing efficiency.

To optimize link indexing, webmasters should regularly monitor server performance, return correct HTTP status codes, maintain clean URL structures, and ensure consistent internal linking patterns.

Regular technical audits can identify potential bottlenecks affecting link discovery and indexation, allowing for timely remediation of issues that could impact search visibility.

What Techniques Can Help Speed Up Link Indexing?

Several proven techniques can accelerate the indexing process for new backlinks and web pages.

Manual submission tools, including Google Search Console and Bing Webmaster Tools, allow website owners to directly request crawling and indexing of specific URLs.

Building a robust network of internal links and leveraging ping services that notify search engines about content updates work together to enhance the speed and efficiency of link indexing.

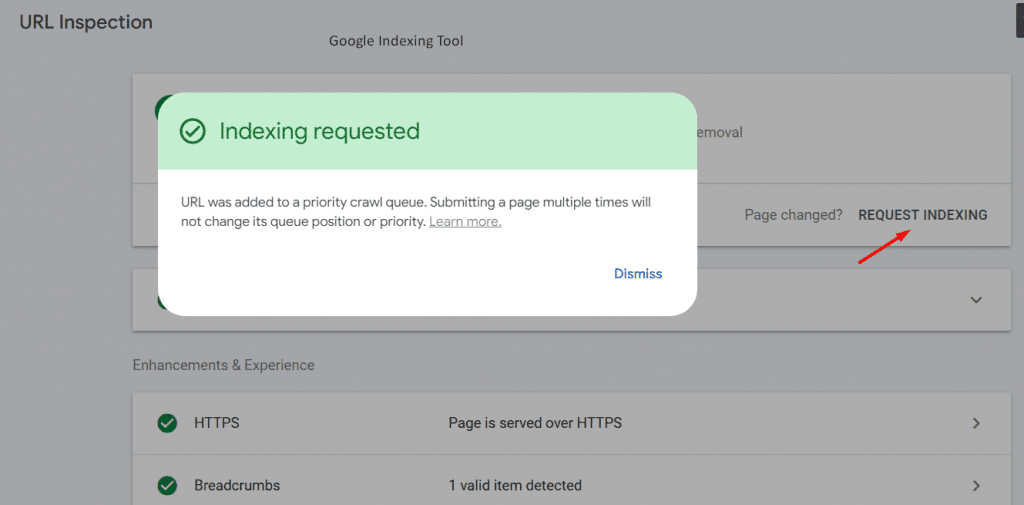

Using Manual Submission

Manual submission remains one of the most direct approaches to expedite link indexing across search engines. By implementing effective manual submission strategies, webmasters can actively control when and how their links are presented to search engines for indexing. The benefits of submission include faster indexing times, increased visibility, and better control over how content appears in search results.

When comparing submission tools, popular options like Google Search Console and Bing Webmaster Tools offer reliable platforms for direct URL submission. However, submission frequency matters: excessive submissions can trigger spam filters, while infrequent submissions may delay indexing. Most experts recommend submitting new URLs within 24-48 hours of publication.

To avoid common submission mistakes, make sure URLs are properly formatted, accessible, and not blocked by robots.txt directives. Also confirm that submitted pages contain valuable content and meet search engine quality guidelines.
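These pre-submission checks can be automated. This sketch assumes you already have the site's robots.txt lines and the page's HTTP status code (for example, from your own fetcher), and it checks against the `Googlebot` user agent, an assumption you can swap for another crawler's name. It uses only Python's standard `urllib.parse` and `urllib.robotparser`.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def presubmit_problems(url, robots_lines, status_code, agent="Googlebot"):
    """Return reasons a URL is not ready for manual submission (empty list = OK)."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        problems.append("URL is not an absolute http(s) URL")
        return problems  # the remaining checks need a well-formed URL
    if parts.fragment:
        problems.append("URL contains a #fragment, which crawlers ignore")
    if status_code != 200:
        problems.append(f"page returns HTTP {status_code}, not 200")
    robots = RobotFileParser()
    robots.parse(robots_lines)  # robots.txt content as a list of lines
    if not robots.can_fetch(agent, url):
        problems.append(f"robots.txt blocks {agent}")
    return problems
```

Running this over a batch of URLs before submitting them keeps obviously broken or blocked URLs out of Search Console or Bing Webmaster Tools requests.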

Implementing a systematic approach to manual submission, combined with proper technical optimization, can greatly reduce indexing delays and improve overall search visibility.

Using Ping Services

Ping services represent a powerful automation tool for notifying search engines about new or updated content on websites. These automated systems send signals to search engines, prompting them to crawl and index new or modified web pages more quickly than they might through natural discovery processes.

The primary benefits of ping services are faster indexing, improved search engine visibility, and less manual effort in content distribution. Tools like Ping-O-Matic let webmasters automate the notification process. Google's XML sitemap ping endpoint, once a popular option, was deprecated in 2023; Google now relies on sitemaps submitted through Search Console or referenced in robots.txt.

When it comes to ping frequency, experts recommend pinging whenever content is published or significantly updated, while avoiding excessive pings that could be considered spam.

Ping automation is built into content management systems like WordPress, which can automatically notify ping services whenever a new post is published.

While the effectiveness of ping services varies among search engines, a strategic pinging approach generally results in faster crawling and indexing of web content. For best results, combine ping services with other indexing techniques, such as XML sitemaps and quality backlinks, to build a comprehensive indexing strategy.
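Blog ping services such as Ping-O-Matic accept the long-standing XML-RPC `weblogUpdates.ping` call, the same kind of ping WordPress's Update Services setting sends. The sketch below builds that request with Python's standard `xmlrpc.client` and adds a simple throttle so the same site is not pinged too often. The one-hour minimum interval is an arbitrary assumption, not a published limit, and `send_ping` makes a real network call, so it is shown but not exercised here.

```python
import time
import xmlrpc.client

PING_ENDPOINT = "http://rpc.pingomatic.com/"  # Ping-O-Matic's XML-RPC endpoint
MIN_INTERVAL_SECONDS = 3600  # assumption: at most one ping per hour per site


def build_ping(blog_name, blog_url):
    """Serialize a weblogUpdates.ping request body (XML) without sending it."""
    return xmlrpc.client.dumps((blog_name, blog_url), methodname="weblogUpdates.ping")


def send_ping(blog_name, blog_url, endpoint=PING_ENDPOINT):
    """Send the ping over the network and return the server's reply."""
    proxy = xmlrpc.client.ServerProxy(endpoint)
    return proxy.weblogUpdates.ping(blog_name, blog_url)


class PingThrottle:
    """Skips pings that arrive sooner than min_interval after the last one."""

    def __init__(self, min_interval=MIN_INTERVAL_SECONDS, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock  # injectable so the throttle can be tested
        self.last_ping = {}

    def should_ping(self, blog_url):
        now = self.clock()
        last = self.last_ping.get(blog_url)
        if last is not None and now - last < self.min_interval:
            return False
        self.last_ping[blog_url] = now
        return True
```

A publishing hook would call `throttle.should_ping(url)` and only then `send_ping(...)`, which keeps bursts of small edits from turning into the excessive pinging the section warns about.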

Building Internal Links

Building internal links serves as a powerful complement to ping services in accelerating link indexing. By implementing strategic internal linking best practices, websites can create a robust network of interconnected pages that search engines crawl more efficiently. This organized link structure helps distribute authority throughout the site while enhancing user experience. If you have a WordPress website, consider using one of the plugins from this list of best internal link builders.

Effective internal link strategies focus on optimizing anchor text with relevant keywords and descriptive phrases that provide clear context about destination pages. Website owners should prioritize creating logical content hierarchies, linking related articles and resources to establish strong topical clusters. This approach not only aids search engine crawlers but also helps users navigate through relevant content more intuitively.

Key techniques include linking from high-authority pages to boost newer content, maintaining consistent navigation structures, and using breadcrumb trails.

When implementing internal linking, focus on creating natural connections between topically related pages rather than forcing links artificially. Additionally, regularly audit internal links to identify and fix broken links, ensuring smooth crawlability and maintaining link equity distribution throughout the site architecture.
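An internal link audit like the one described above can be sketched over a simple link map. The `site` dictionary (page path to the list of internal paths it links to) is hypothetical input you would build from your own crawl. The function reports click depth from the homepage, orphan pages that no other page links to, broken internal links pointing at pages that do not exist, and pages a crawler starting at the homepage could never reach.

```python
from collections import deque


def audit_internal_links(site, home="/"):
    """Report click depth, orphans, broken links, and unreachable pages.

    site maps each page path to the list of internal paths it links to.
    """
    # Breadth-first search from the homepage gives the fewest clicks to each page.
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in site.get(page, []):
            if target in site and target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)

    # Pages that receive no internal links from any other page.
    linked_to = {t for src, targets in site.items() for t in targets if t != src}
    orphans = sorted(p for p in site if p != home and p not in linked_to)
    # Links that point to paths missing from the site map (likely 404s).
    broken = sorted((src, t) for src, targets in site.items() for t in targets if t not in site)
    # Pages a crawler starting at the homepage would never reach.
    unreachable = sorted(p for p in site if p not in depth)
    return depth, orphans, broken, unreachable
```

Pages with a large click depth are good candidates for links from high-authority pages, and orphans or unreachable pages usually need at least one contextual internal link before crawlers can find them.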

How Does Link Indexing Boost SEO?

Search engine optimization thrives on effective link indexing, a powerful way to improve your website's visibility and rankings. When your backlinks are indexed quickly, you'll notice several key benefits.

First, you’ll experience faster recognition of your site’s authority by search engines like Google, leading to improved search result positions.

Then, you’ll see increased organic traffic as these indexed backlinks create clear pathways for users to discover your content.

You’ll also gain a competitive edge over others whose links aren’t indexed as efficiently.

Additionally, your site’s credibility grows as Google interprets these indexed backlinks as endorsements from other websites.

The faster indexing process also helps search engines crawl your site more effectively, ensuring all your pages are discovered and properly evaluated.

Why Do Links Fail to Be Indexed?

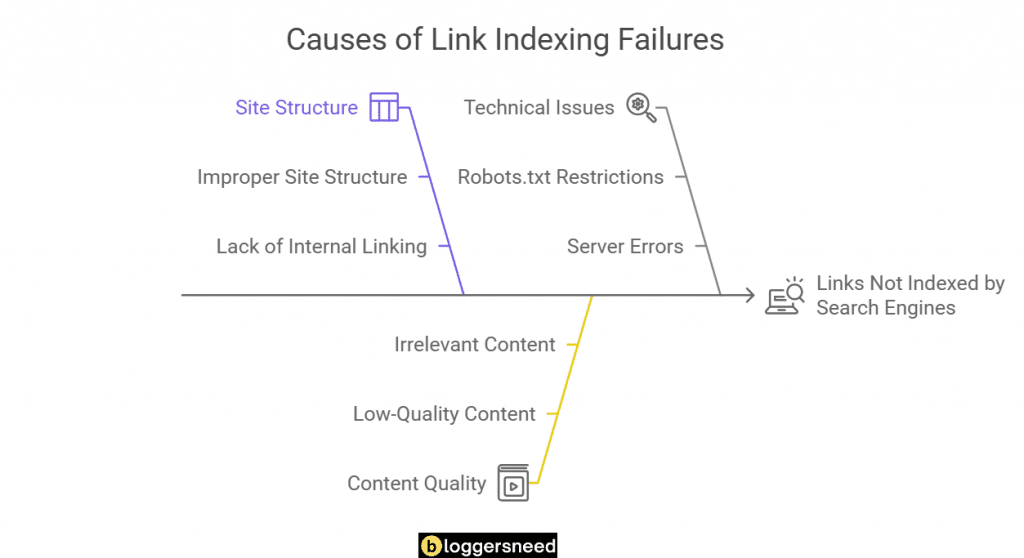

Link indexing faces several critical challenges that can prevent search engines from properly discovering and cataloging backlinks, including technical barriers like robots.txt restrictions, noindex directives, nofollow attributes, and JavaScript-rendered content.
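Some of these barriers are visible in a page's HTML. This sketch scans `<a>` tags with Python's standard `html.parser` and flags links carrying the `rel` hints `nofollow`, `sponsored`, or `ugc`, plus pseudo-links that only work through JavaScript (a `javascript:` href or no href at all), which crawlers may not follow. It inspects static HTML only, so links injected later by client-side JavaScript will not appear in its input at all.

```python
from html.parser import HTMLParser

HINT_RELS = {"nofollow", "sponsored", "ugc"}


class LinkAuditor(HTMLParser):
    """Flags <a> tags that crawlers may not follow or pass signals through."""

    def __init__(self):
        super().__init__()
        self.findings = []  # (href, reason) pairs in document order

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = (attributes.get("href") or "").strip()
        rel_values = set((attributes.get("rel") or "").lower().split())
        if not href:
            self.findings.append(("<no href>", "no href: not a crawlable link"))
        elif href.lower().startswith("javascript:"):
            self.findings.append((href, "javascript: pseudo-link"))
        elif rel_values & HINT_RELS:
            hints = ", ".join(sorted(rel_values & HINT_RELS))
            self.findings.append((href, f"rel hint: {hints}"))


def audit_links(html):
    """Return findings for every problematic <a> tag in the given HTML."""
    auditor = LinkAuditor()
    auditor.feed(html)
    return auditor.findings
```

Ordinary links with a real `href` and no `rel` hint produce no finding, so an empty result means every link on the page is at least structurally crawlable.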

Search engine crawlers such as Googlebot often fail to index links because of poor site architecture, slow server responses, slow page load times, or content hidden behind login walls.

These indexing obstacles can be addressed through strategic solutions like XML sitemaps, internal linking optimization, and ensuring crawl budget efficiency through proper technical SEO implementation.
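Generating an XML sitemap that follows the sitemaps.org protocol takes only a few lines. This sketch uses Python's standard `xml.etree.ElementTree`; the input is a hypothetical list of (URL, last-modified date) pairs, and only the required `<loc>` element and the optional `<lastmod>` element are written.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute_url, lastmod 'YYYY-MM-DD' or None). Returns XML text."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")
```

The protocol caps a single sitemap file at 50,000 URLs (and 50 MB uncompressed), so larger sites split their URLs across several files listed in a sitemap index.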

What Solutions Can Help Overcome Common Indexing Issues?

Leading SEO tools like Ahrefs, Semrush, and Google Search Console provide thorough index and link monitoring capabilities that help track indexed backlinks, identify indexing issues, and measure overall link health.

These platforms offer features such as index status checking, crawl reports, and link analytics that enable webmasters to quickly spot problems and implement necessary fixes.

What Are Some Recommended SEO Tools for Indexing and Tracking Indexed Links?

When it comes to monitoring and managing indexed links effectively, several link indexing tools stand out in the industry.

- URL Monitor: The top pick on this list; it indexes pages and tracks URL status, providing instant alerts for downtime or indexing issues to ensure optimal website performance.

- Index Guru: Automates bulk URL submissions for quick indexing, enhancing visibility and driving organic traffic without manual effort.

- Omega Indexer: Facilitates fast indexing of multiple URLs simultaneously, ensuring extensive site content is recognized promptly by search engines.

- IndexMeNow: Offers an instant indexing service, reducing the wait for new content to become visible and driving timely traffic to your site.

- Indexing Expert: Provides detailed analytics on URL indexing status, enabling targeted optimization to improve search rankings and increase site traffic.

How Can You Use Link Indexing Tools to Improve Your Strategy?

Implementing link indexing tools strategically requires understanding both their core functionalities and potential limitations.

By integrating backlink analysis with content promotion efforts, you can strengthen your SEO strategy and improve site authority.

Focus on leveraging these tools to identify high-value link building opportunities, monitor indexation rates, and adjust your approach based on performance metrics and competitive insights.

How Do You De-Index Links?

From a technical standpoint, there are several effective methods to de-index links from search engines.

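The primary approaches include using the robots.txt file to block crawler access, adding a noindex meta tag to the page's HTML head section, and using Google Search Console's URL removal tool. Robots.txt prevents search engine crawlers from accessing specific pages, while noindex meta tags explicitly tell search engines not to index particular content. Note that a robots.txt block alone does not guarantee de-indexing: a blocked URL can still be indexed if other pages link to it, and crawlers cannot see a noindex tag on a page they are not allowed to fetch.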

For temporary de-indexing, Google Search Console's removal tool provides the quickest solution, typically hiding URLs within 24 hours; such removals last about six months, so pair them with a permanent method. Additional techniques include the X-Robots-Tag HTTP header, canonical tags to consolidate duplicate content, and URL removal requests through other webmaster tools.
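To confirm a de-indexing directive is actually being served, check both places it can live: the `X-Robots-Tag` response header and a robots `<meta>` tag in the page's HTML. This sketch takes headers and HTML you have already fetched (hypothetical inputs) and reports whether a `noindex` or `none` directive is present. As a simplification, it ignores crawler-specific forms such as `<meta name="googlebot">` or an `X-Robots-Tag` value prefixed with a user agent.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            for token in (attributes.get("content") or "").split(","):
                self.directives.add(token.strip().lower())


def is_noindexed(headers, html):
    """True if a generic noindex (or none) directive appears in the header or HTML."""
    header_tokens = set()
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            header_tokens |= {t.strip().lower() for t in value.split(",")}
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = header_tokens | parser.directives
    return bool(directives & {"noindex", "none"})
```

The header check matters for non-HTML files such as PDFs, which cannot carry a meta tag; and because crawlers must fetch a page to see either directive, the URL should not also be blocked in robots.txt.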

Affiliate Disclosure: Some of the links in this post are affiliate links, which means I may earn a small commission if you make a purchase through those links. This comes at no extra cost to you. Thank you for your support!